Revolutionising warehouse data with AI-enabled autonomous mobile robots

March 20, 2024

The Dexory Autonomous Mobile Robot (AMR) scans up to 15,000 locations per hour, capturing over 36 TB of data as it autonomously navigates around a warehouse. This data is a mixture of high-resolution images and 3D point cloud information which is used to analyse the barcodes, size and shape of each pallet in a warehouse.

Processing this phenomenal amount of data on a mobile robot, in the chaotic environment of a warehouse with minimal network connectivity, is a difficult challenge, but one that Dexory have tackled with a pioneering approach to software.

Synchronising sensors

The data journey from the robot's sensors to the DexoryView software platform starts with accurate data capture. To achieve this, the many sensors, lidars and cameras that enable the robot to localise, manoeuvre and scan first need to be synchronised to the same time.

To ensure the data captured is as accurate as possible, first all the sensors need to be synchronised to the same timestamp

‘The lasers from each lidar send millions of points per second and we need to know exactly what time each of those measurements is taken so we can feed that into our localisation and navigation strategies,’ explains Matt Macleod, Head of Software at Dexory. ‘If these sensors are not synchronised correctly, they can gradually drift over time and introduce huge errors. So, while the robot is scanning, the central computer in the robot’s base continuously sends a timestamp to all the sensors with an accuracy of 100 nanoseconds.’
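To see why drift matters, the back-of-envelope sketch below converts clock drift into timing error and then into position error for a moving robot. The 20 ppm drift rate and the 1 m/s robot speed are illustrative assumptions, not Dexory figures; only the 100 ns synchronisation accuracy comes from the quote above.

```python
def drift_error_ns(elapsed_s: float, drift_ppm: float) -> float:
    """Timing error in nanoseconds built up by an unsynchronised clock.

    1 ppm of drift equals 1 microsecond (1,000 ns) of error per second.
    """
    return elapsed_s * drift_ppm * 1_000.0


def position_error_mm(timing_error_ns: float, speed_m_s: float) -> float:
    """Distance in millimetres the robot travels during the timing error."""
    return speed_m_s * timing_error_ns * 1e-9 * 1_000.0


# Assumed values for illustration only.
ASSUMED_DRIFT_PPM = 20.0   # hypothetical free-running sensor clock
ASSUMED_SPEED_M_S = 1.0    # hypothetical robot speed while scanning

# One hour without resynchronisation: about 72 ms of timing error,
# which is about 72 mm of travel at 1 m/s.
unsynced_ns = drift_error_ns(3600, ASSUMED_DRIFT_PPM)
unsynced_mm = position_error_mm(unsynced_ns, ASSUMED_SPEED_M_S)

# With timestamps held to 100 ns, the equivalent travel is about 0.0001 mm.
synced_mm = position_error_mm(100, ASSUMED_SPEED_M_S)

print(f"unsynchronised: {unsynced_mm:.1f} mm, synchronised: {synced_mm:.4f} mm")
```

Under these assumptions, an hour of unchecked drift would misplace a measurement by several centimetres, while continuous synchronisation keeps the error far below anything a lidar can resolve.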

Accurate data capture

Once all the sensors are synchronised, the next step to accurately capturing data is for the robot to understand its exact position relative to its surroundings. Dexory’s unique localisation technique combines data from 3D cameras as well as 2D and 3D lidars to map the environment both horizontally and vertically around the robot. This not only ensures the robot navigates around a warehouse safely, avoiding obstacles, but also helps to build a more representative digital twin model for the customer to use in DexoryView.

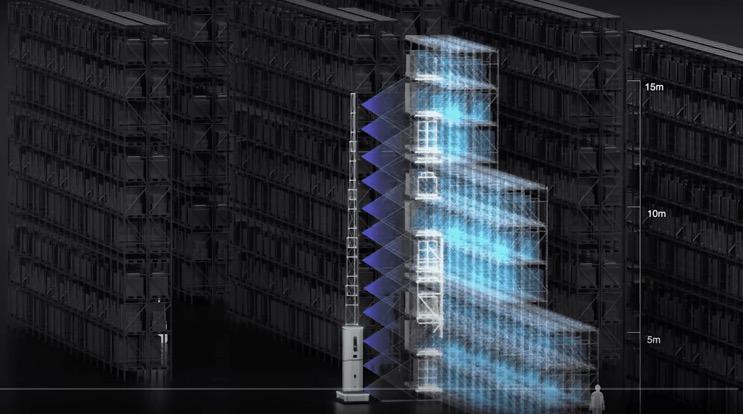

When the robot is ready to scan an aisle, its mechanical tower extends to 12 m and the lidars and cameras scan every location of each row within the rack simultaneously as the robot moves down the aisle. The cameras capture high-resolution images which are used to identify the barcodes of pallets, while the lidars record point cloud data which measures the volume of each rack location.

The tower is instrumented with lidars which capture point cloud data and 3D cameras which capture high resolution images

‘Each warehouse is different and can lead to different sources of noise in the data,’ explains Marcus Scheunemann, Head of Autonomy at Dexory. ‘There can be noise from the environment such as reflections on the racking from the sun, or if the racking is dark it can absorb some of the laser from the lidars, so less is reflected back which can make it difficult for the cameras to scan the barcodes. You can also get particles of dust or dirt that land on the surface of sensors which obstruct the field of view and cause the robot to think that obstacles are much closer than they actually are.’

‘Then there is the inherent noise within the sensors themselves due to the measurement technology that is never 100% accurate,’ continues Scheunemann. ‘Finally, you have systematic noise within the lasers where the beam can split when measuring corners of objects, leading to false assumptions. To compensate for all this noise, our approach is to mitigate the root cause of these errors and then use complex filtering techniques to ensure that only the most accurate data is fed to DexoryView.’

An example of this is the dust mitigation system Dexory have designed to regularly clean the lidars. This features a high-power fan which blows bursts of air over the surface of the lidars closest to the floor every hour. This ensures the lidars can accurately sense the environment and also avoids staff having to manually wipe the sensors.

Data filtering

After the data has been measured, it is then filtered using complex algorithms that extract the most reliable information from each data set whilst also mitigating any noise within the data.

‘Essentially we capture as much data as possible, filter what we need and then get rid of irrelevant data as soon as we can,’ highlights Macleod. ‘For example, when the lidars first scan the environment, we immediately filter out any measurement of the robot itself, because we’re not interested in that data. We then filter a step further and only focus on the rack we are interested in. Finally, we filter out any sources of noise or error before the data gets processed.’

The same approach is taken with the images captured by the 3D cameras on the tower. Once the image processing algorithms have identified the barcodes in the images, the images themselves are discarded. This avoids storing, processing and uploading unnecessary data.
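The three lidar filtering stages Macleod describes (drop measurements of the robot itself, keep only the rack of interest, then remove noise) can be sketched as a simple pipeline. This is a minimal illustration, not Dexory's implementation: the axis-aligned boxes, the neighbour-count noise filter and all the numbers are assumptions chosen to keep the example small.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[Point, Point]  # (minimum corner, maximum corner), hypothetical


def in_box(p: Point, box: Box) -> bool:
    """True if point p lies inside the axis-aligned box."""
    lo, hi = box
    return all(lo[i] <= p[i] <= hi[i] for i in range(3))


def remove_self(points: List[Point], robot_box: Box) -> List[Point]:
    """Stage 1: drop points that land on the robot's own body."""
    return [p for p in points if not in_box(p, robot_box)]


def crop_to_rack(points: List[Point], rack_box: Box) -> List[Point]:
    """Stage 2: keep only points inside the rack being scanned."""
    return [p for p in points if in_box(p, rack_box)]


def remove_isolated(points: List[Point], radius: float, min_neighbours: int) -> List[Point]:
    """Stage 3: drop points with too few neighbours within radius (assumed noise model)."""
    r2 = radius * radius
    kept = []
    for i, p in enumerate(points):
        neighbours = sum(
            1
            for j, q in enumerate(points)
            if i != j and sum((a - b) ** 2 for a, b in zip(p, q)) <= r2
        )
        if neighbours >= min_neighbours:
            kept.append(p)
    return kept


def filter_scan(points, robot_box, rack_box, radius=0.1, min_neighbours=1):
    """Apply the three stages in order, shrinking the data at each step."""
    points = remove_self(points, robot_box)
    points = crop_to_rack(points, rack_box)
    return remove_isolated(points, radius, min_neighbours)


# Illustrative scan in metres; every coordinate here is made up.
robot_box = ((-0.5, -0.5, 0.0), (0.5, 0.5, 1.0))
rack_box = ((1.0, -2.0, 0.0), (2.0, 2.0, 12.0))
scan = [
    (0.0, 0.0, 0.5),    # hits the robot itself
    (1.5, 0.0, 1.0),    # pallet surface
    (1.5, 0.05, 1.0),   # pallet surface, close to the previous point
    (1.5, 1.5, 8.0),    # lone point inside the rack, treated as noise
    (5.0, 0.0, 1.0),    # beyond the rack of interest
]
clean = filter_scan(scan, robot_box, rack_box)
print(clean)
```

The brute-force neighbour search is quadratic and only suitable for a toy example; a real point cloud would need a spatial index, but the ordering of the stages, cheapest rejection first, is the point being illustrated.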

Processing data with a distributed computing network

Despite this relentless drive to downsize the data, there are still terabytes of data that require processing. A fast and efficient way to achieve this is edge processing, in which the workload is distributed across a network of computers so that the data is stored and processed closer to its source, reducing latency.

‘We have designed each scanning rail to have its own CPU, so the load is split across these computers,’ reveals Macleod. ‘This means that each section of the tower handles its own data, reducing the amount of data that needs to be transmitted to the main computer in the base.’

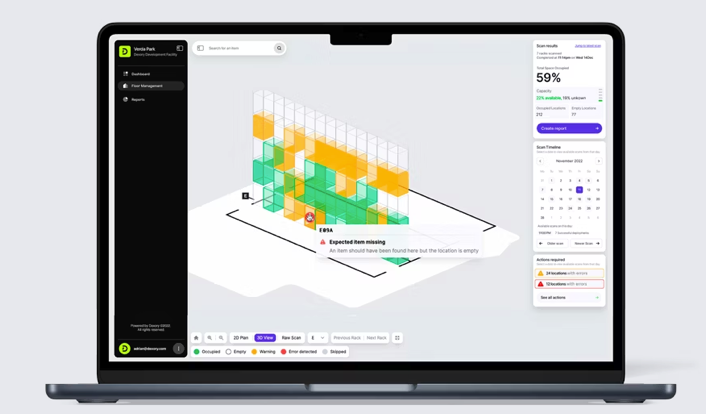

‘This base computer is responsible for aggregating these data streams to build an overall representative picture of the entire rack. So in the end, only this optimised data set is sent to the cloud for the customer to access through DexoryView, which is about 10% of what we originally capture.’

The processing workload is split across a distributed computer network where each scanning rail has its own computer
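The split between per-rail computers and the base computer can be sketched as a small map-and-aggregate pattern. This is a hedged illustration only: threads stand in for the separate rail computers, and the record layout, location names and barcode values are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def process_rail(rail_id: int, raw_readings: List[dict]) -> dict:
    """Runs on one rail's computer: reduce raw readings to a compact summary."""
    locations: Dict[str, dict] = {}
    for reading in raw_readings:
        entry = locations.setdefault(
            reading["location"], {"barcode": None, "points": 0}
        )
        entry["points"] += reading["points"]
        if reading.get("barcode"):
            entry["barcode"] = reading["barcode"]
    return {"rail": rail_id, "locations": locations}


def aggregate(rail_summaries: List[dict]) -> Dict[str, dict]:
    """Runs on the base computer: merge rail summaries into one rack picture.

    Assumes each rail covers distinct rack locations.
    """
    rack: Dict[str, dict] = {}
    for summary in rail_summaries:
        rack.update(summary["locations"])
    return rack


# Made-up raw data for two rails.
rails = {
    0: [
        {"location": "A-01-1", "points": 1200, "barcode": None},
        {"location": "A-01-1", "points": 800, "barcode": "PAL123"},
    ],
    1: [
        {"location": "A-01-2", "points": 500, "barcode": None},
    ],
}

# Each rail is processed independently; only the summaries reach the base.
with ThreadPoolExecutor() as pool:
    summaries = list(pool.map(process_rail, rails.keys(), rails.values()))

rack = aggregate(summaries)
print(rack)
```

The design choice illustrated is that raw readings never leave the rail: the base computer only ever sees one small record per rack location.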

Uploading data to the cloud

The location of large warehouses often means they have intermittent Wi-Fi and network signals at best, making uploading data to the cloud a significant challenge. This is another reason why Dexory has focussed so strongly on filtering out irrelevant data whilst also processing as much as possible on the robot itself, so that only a refined data set is sent to the cloud.

This approach of streamlining the data at every step, combined with distributed processing, is how Dexory can capture 250 TB of images and 2 TB of point cloud data per day, process it and upload it to the cloud in minutes, which is simply impossible to achieve with other warehouse scanning solutions.
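One common way to cope with an intermittent link is a store-and-forward queue: refined results wait on the robot until the network is available. The article does not say how Dexory handles this, so the sketch below is purely an assumption; `UploadQueue` and the `send` callable are hypothetical names.

```python
from collections import deque
from typing import Callable, Deque


class UploadQueue:
    """Holds refined payloads until the link can deliver them (illustrative only)."""

    def __init__(self, send: Callable[[bytes], bool]) -> None:
        # send is a hypothetical uploader returning True on success.
        self._send = send
        self._pending: Deque[bytes] = deque()

    def enqueue(self, payload: bytes) -> None:
        """Store a payload for later upload."""
        self._pending.append(payload)

    def flush(self) -> int:
        """Try to upload in order; stop at the first failure and keep the rest."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # link is down: retry this payload on the next flush
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


# Simulated link that is down for the first attempt, then recovers.
link_states = iter([False, True, True])


def fake_send(payload: bytes) -> bool:
    return next(link_states)


queue = UploadQueue(fake_send)
queue.enqueue(b"rack-A-summary")
queue.enqueue(b"rack-B-summary")

first_attempt = queue.flush()    # link down, nothing sent
second_attempt = queue.flush()   # link up, both payloads sent
print(first_attempt, second_attempt, len(queue))
```

Because only the refined data set is queued, the backlog stays small even after a long outage, which fits the article's emphasis on shrinking the data before it leaves the robot.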

‘One of the most impressive aspects about our robot is that we have a hardware device installed in warehouses which we can constantly send software updates to remotely,’ concludes Macleod. ‘This is very rare in the industrial space as typically software is first installed on the hardware device and that’s it. Whereas we have taken techniques from other applications and integrated them so that we can continuously add features, improve data quality and enhance the overall experience for the customer.’

Dexory’s approach to streamlining data helps make DexoryView a fast and intuitive platform for customers to use